How Small Can You Make Your Stupid Face?

Posted on by Adam Grossman

Using High-Falutin’ Computer Vision Algorithms for Quantitative Face Reduction Analysis

When we saw this clip from the first episode of Workaholics on Comedy Central, it made us mad:

Here you have an individual who is asked to judge which of his friends can make the smaller face, but instead of taking this task seriously he hastily declares a winner based on scant evidence and with no discernible justification!

What does it say about the human race as a tool-building species that we still — after centuries of technological progress — have no means of objectively quantifying a person’s face reduction aptitude?

Videos such as this simply perpetuate (dare we say, celebrate?) a lack of scientific rigor.

This will not stand. Jack and I knew we had to do something. So we built Tiny Face, the world’s first mobile app for scientifically determining one’s face reduction percentage.

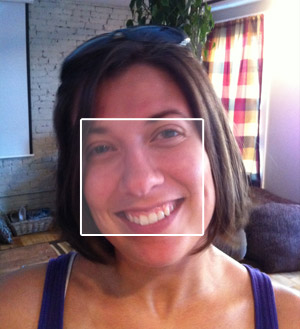

How it works is simple: The user is prompted to make their normal face followed by their tiny face, and the app then computes how much smaller the latter is compared to the former. It’s not about absolute face size, but rather how much you can compress your face relative to your baseline "normal" face. Here’s an example output:

How does it work?

Face Detection

Face detection algorithms have been around for a while. We use OpenCV, an open-source library of computer vision algorithms (written in C and C++, and easily compiled for the iPhone), to detect faces in the input image.

It’s pretty easy to use, since other people have done the hard work of training these magical algorithmic gizmos called Haar Classifiers using collections of thousands of sample faces. We simply take one of these classifiers, feed it into OpenCV along with the input image, and out comes a bounding box around any faces! Like magic!
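For the curious, here is a minimal sketch of that step using OpenCV's Python bindings rather than the C build the app uses. It assumes the `opencv-python` package and its bundled `haarcascade_frontalface_default.xml` file; the function names and the `scaleFactor` / `minNeighbors` values are illustrative choices, not the app's actual settings.

```python
def largest_box(boxes):
    """Return the (x, y, w, h) box with the largest area, or None if there are none."""
    return max(boxes, key=lambda b: b[2] * b[3], default=None)


def detect_face(image_path):
    """Detect faces in an image file and return the largest bounding box found."""
    # Imported lazily so largest_box() stays usable without OpenCV installed.
    import cv2

    image = cv2.imread(image_path)
    if image is None:
        raise FileNotFoundError(image_path)
    gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)

    # Pretrained frontal-face Haar cascade shipped with the opencv-python package.
    cascade = cv2.CascadeClassifier(
        cv2.data.haarcascades + "haarcascade_frontalface_default.xml"
    )
    # Common starting values for the detector, not tuned ones.
    faces = cascade.detectMultiScale(gray, scaleFactor=1.1, minNeighbors=5)

    # Each detection is (x, y, width, height) in pixels.
    return largest_box([tuple(int(v) for v in face) for face in faces])
```

Keeping only the largest box is one simple way to ignore stray detections in the background; it assumes the person making the face is the one closest to the camera.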

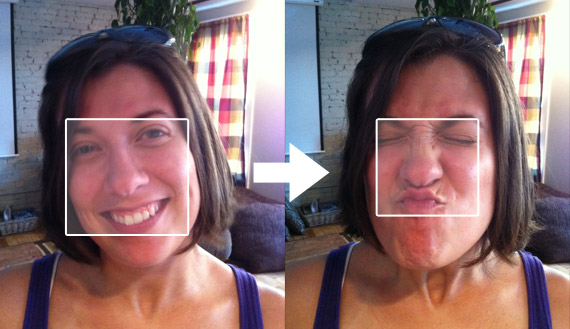

By doing this for both the normal face and the scrunched face, we get two bounding boxes whose areas we can compare to figure out how much smaller the face has become:
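As a rough sketch of that comparison, assuming each bounding box is an `(x, y, width, height)` tuple in pixels (the function names here are made up for illustration):

```python
def box_area(box):
    """Area in square pixels of an (x, y, width, height) bounding box."""
    _x, _y, width, height = box
    return width * height


def face_reduction_percent(normal_box, tiny_box):
    """How much smaller the tiny face's box is than the normal face's box, in percent."""
    normal_area = box_area(normal_box)
    if normal_area <= 0:
        raise ValueError("normal face box must have a positive area")
    return 100.0 * (1.0 - box_area(tiny_box) / normal_area)


# A 200x200 normal face scrunched down to 160x160 is a 36% reduction by area.
print(round(face_reduction_percent((0, 0, 200, 200), (10, 10, 160, 160)), 1))
```

Note that this compares areas, so shrinking each side of the box by 20% counts as a 36% reduction.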

Not So Fast!

This method only works in tightly controlled situations where the user and camera are stationary, and the only thing that changes is the face. But if we were to turn this into an iPhone app, we’d have to deal with the real world, where we can’t expect people to hold themselves and the phone still.

The problem with simply comparing the bounding boxes of the normal and tiny faces is that moving the camera closer or farther away results in unacceptable errors due to perspective scaling. For example:
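To put numbers on it, here is a small sketch using the simple pinhole-camera model, where on-screen size is inversely proportional to distance. The face width, distances, and focal length below are hypothetical values picked to make the arithmetic easy, not measurements from the app.

```python
def apparent_width_px(real_width_cm, distance_cm, focal_length_px=500.0):
    """Pinhole-camera model: on-screen size shrinks in proportion to distance."""
    return focal_length_px * real_width_cm / distance_cm


def measured_reduction_percent(normal_width_px, tiny_width_px):
    """Area-based reduction, assuming the face box scales equally in both axes."""
    return 100.0 * (1.0 - (tiny_width_px / normal_width_px) ** 2)


# Hypothetical numbers: a 16 cm wide face shot from 50 cm away...
normal = apparent_width_px(16.0, 50.0)
# ...then scrunched to 80% of its width (a true 36% area reduction),
# but with the phone pulled in to 40 cm.
tiny = apparent_width_px(16.0 * 0.8, 40.0)

# The closer camera cancels the scrunch, so this prints roughly 0.
print(round(measured_reduction_percent(normal, tiny), 1))
```

In other words, moving the phone 10 cm closer is enough to wipe out an honest 36% face reduction, and moving it farther away would inflate the score just as easily.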

We could just ask users to keep their camera and face steady. But, as any software developer can tell you, most users are terrible awful cheating sons-of-bitches (haha! We love our users!).

So how do we correct for this?

Feature Tracking

If we knew the distance from the camera to the face in both images, we could compensate for perspective. Unfortunately, there’s no way to get the absolute distance from each image alone (until the iPhone comes equipped with a 3D camera or laser range-finder).

But we can compare the two images, and employ a nifty trick:

Take a look at the photos above. In the before and after images she’s changed the size of her face, but other aspects of her head / body are unaltered. These include her hairline, the shape of her head, shoulders, clothing, freckles, jewelry, etc.

If we can identify these features in both images, we can compare how their sizes and positions differ and compute the relative change in scale between them.

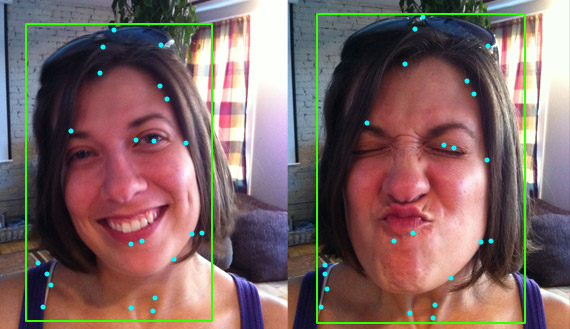

Fortunately, OpenCV comes with neat ways to identify and track features from one image to the next. Using the position of the face, we can draw a box around the likely location of the entire head and body, and then run these algorithms to find features in common:
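Here is one way this could look with OpenCV's Python bindings, using corner detection plus pyramidal Lucas-Kanade optical flow. It assumes `opencv-python` and NumPy are installed and that both images are already grayscale arrays. The expansion factors in `body_region` and the detector settings are guesses for illustration, not the values the app uses.

```python
def body_region(face_box, image_size, widen=1.0, above=0.5, below=2.0):
    """Expand a face box (x, y, w, h) into a head-and-body box, clipped to the image.

    widen, above and below are multiples of the face size (hypothetical defaults).
    """
    x, y, w, h = face_box
    img_w, img_h = image_size
    left = max(0, int(x - widen * w))
    top = max(0, int(y - above * h))
    right = min(img_w, int(x + w + widen * w))
    bottom = min(img_h, int(y + h + below * h))
    return left, top, right - left, bottom - top


def track_features(gray_a, gray_b, region, max_corners=200):
    """Find corners inside region of gray_a and track them into gray_b."""
    # Imported lazily so body_region() stays usable without OpenCV installed.
    import cv2
    import numpy as np

    # Restrict corner detection to the head-and-body region.
    x, y, w, h = region
    mask = np.zeros(gray_a.shape[:2], dtype=np.uint8)
    mask[y:y + h, x:x + w] = 255

    pts_a = cv2.goodFeaturesToTrack(
        gray_a, maxCorners=max_corners, qualityLevel=0.01, minDistance=7, mask=mask
    )
    if pts_a is None:
        return [], []

    # Pyramidal Lucas-Kanade: where did each corner end up in the second image?
    pts_b, status, _err = cv2.calcOpticalFlowPyrLK(gray_a, gray_b, pts_a, None)

    # Keep only the points the tracker reports as successfully found.
    found = status.ravel() == 1
    return pts_a[found].reshape(-1, 2).tolist(), pts_b[found].reshape(-1, 2).tolist()
```

The two returned lists line up index by index, so entry `i` in the first list and entry `i` in the second are the same physical feature seen in each image.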

By then comparing the distance between these points in both images, we can calculate by how much the camera has moved closer or farther away, and compensate accordingly. Presto!
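A minimal sketch of that calculation, assuming we already have two equal-length lists of matched `(x, y)` points, one per image. Taking the median of the pairwise distance ratios is our choice here for resisting a few bad matches, and the function names are illustrative.

```python
from itertools import combinations
from math import dist
from statistics import median


def relative_scale(points_a, points_b):
    """Estimate how much larger image B appears than image A.

    points_a[i] and points_b[i] must be the same feature seen in each image.
    """
    if len(points_a) != len(points_b) or len(points_a) < 2:
        raise ValueError("need at least two matched point pairs")
    ratios = []
    for i, j in combinations(range(len(points_a)), 2):
        d_a = dist(points_a[i], points_a[j])
        d_b = dist(points_b[i], points_b[j])
        if d_a > 1e-6:  # skip coincident points to avoid dividing by zero
            ratios.append(d_b / d_a)
    if not ratios:
        raise ValueError("all matched points coincide")
    return median(ratios)


def compensated_reduction_percent(normal_area, tiny_area, scale):
    """Face reduction after undoing the camera's change in distance."""
    # Areas grow with the square of the linear scale factor.
    corrected_tiny_area = tiny_area / scale ** 2
    return 100.0 * (1.0 - corrected_tiny_area / normal_area)


# Features spread 25% farther apart in the second image: the camera moved closer.
scale = relative_scale([(0, 0), (100, 0), (0, 100)], [(10, 10), (135, 10), (10, 135)])
# Two face boxes of equal area then hide a real reduction of about 36%.
print(round(compensated_reduction_percent(25600, 25600, scale), 1))
```

Distances between points are used instead of raw positions because they are unaffected by the subject simply shifting left or right in the frame.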

Actually, while this works in theory, it doesn’t always work in practice. In our tests we found that users move around quite a bit between images, leading to inconsistent lighting, blurring, and all sorts of similar nastiness.

In fact, we weren’t able to find a single feature tracking algorithm that could handle all these situations. So we resorted to using multiple ones, including the Lucas-Kanade method and SIFT (Scale-Invariant Feature Transform).

We run them all (after passing the images through various cleaning stages), and then "sanity check" the resulting numbers to determine which results are likely to be most accurate.
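The exact checks aren't spelled out here, so the following is only one hypothetical form a sanity check could take: throw out scale estimates that are physically implausible, then average the ones that roughly agree with each other. The plausibility range, tolerance, and function name are all assumptions made for illustration.

```python
from statistics import median


def pick_scale(estimates, low=0.5, high=2.0, tolerance=0.15):
    """Combine per-algorithm scale estimates into one value, or None if none are usable.

    estimates maps an algorithm name to its scale estimate (None if it failed).
    low, high and tolerance are hypothetical thresholds.
    """
    # Drop failures and estimates implying an implausibly large camera move.
    plausible = [s for s in estimates.values() if s is not None and low <= s <= high]
    if not plausible:
        return None

    # Keep estimates close to the median, then average them.
    center = median(plausible)
    agreeing = [s for s in plausible if abs(s - center) <= tolerance * center]
    return sum(agreeing) / len(agreeing)


# One tracker went off the rails; the other two agree on roughly 1.25.
print(pick_scale({"lucas_kanade": 1.24, "sift": 1.26, "other": 3.9}))
```

Returning `None` when nothing survives lets the caller decide what to do, for example asking the user to retake the photos.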

So there you go. After countless hours of tweaking numbers and nearly spraining our faces generating hundreds of test images, we had ourselves our very first iOS app!

You can check out user-submitted faces at the Tiny Face website and buy the app (only 99¢!) from the App Store for the iPhone 4, iPod touch and iPad 2.

WARNING FROM YOUR MOM: Prolonged use of this app can cause your face to freeze like that, young lady.